Key Capabilities

Detect and mitigate bots and LLM agents that scrape your content, pricing, and data, so your content stays secure and your business stays in control.

Pre-filter Bots Before They Land on Your Site

Block scraping bots on the first request, before they can access a single page. An invisible challenge weeds out bots at the edge, with no interaction required from the end user.

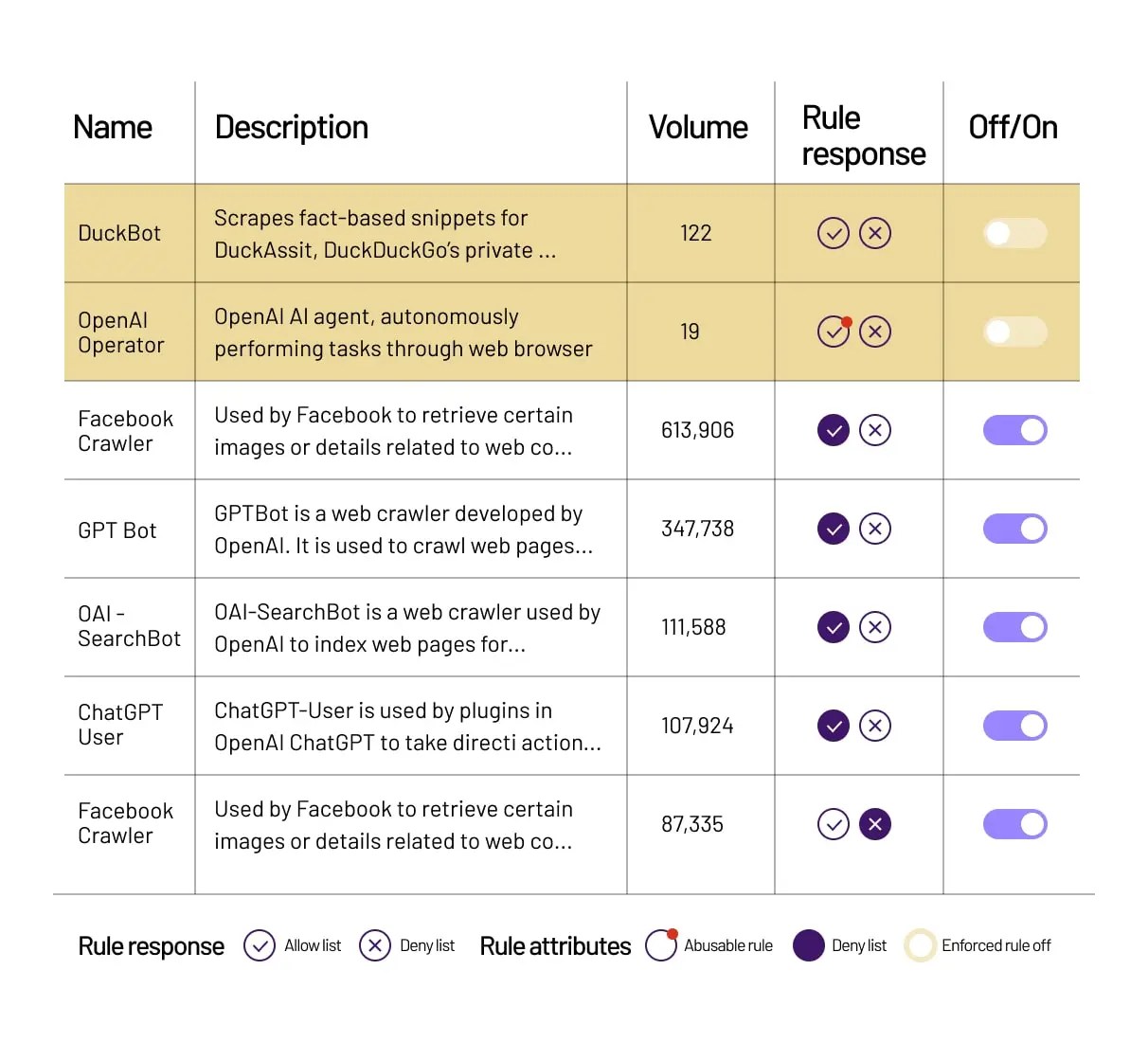

Monitor and Control Known Bots and LLM Scrapers

Get visibility into LLM scrapers and known bot activity. Choose to allow, deny, monetize, suppress ads, or show alternate content.

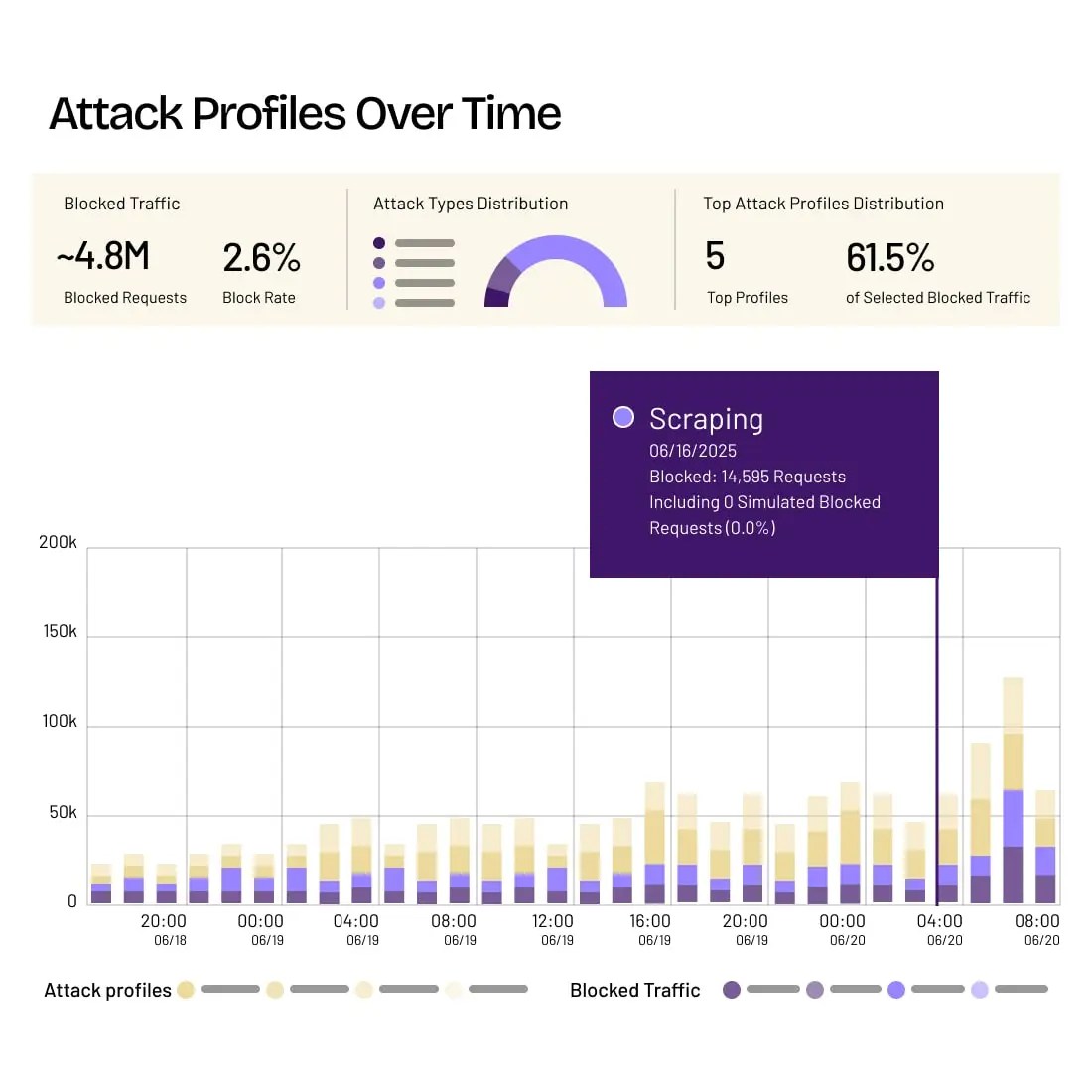

Get Granular Insights Into Scraping Activity

Define threat priorities and respond quickly to evolving risks. Pinpoint bot attack paths and changing behaviors, and get a detailed view of attackers’ actions and intent.

Protecting Customers from Evolving Risks

HUMAN is trusted by global organizations across industries to protect against scraping by sophisticated bots and LLM agents.

Threat Intelligence: The HUMAN Element

The Satori Threat Intelligence and Research Team uncovers, analyzes, and disrupts cyberthreats and fraud schemes that undermine trust across the digital landscape. Its research strengthens protection for customers and thwarts bad actors, making the internet safer.

Stop Scraping with HUMAN

Protect against content, price, and data scraping by sophisticated bots and LLM agents with HUMAN Sightline.

FAQ

What is web scraping?

Web scraping is the automated extraction of large amounts of data from websites by bots, often without permission. Typical targets include pricing, product details, and proprietary content. Scraping can hurt businesses by giving competitors an unfair edge or by overloading servers.

How does web scraping work?

Scraping bots visit web pages, extract data from the HTML or from APIs, and store that information for unauthorized use. These bots can mimic human browsing behavior to evade detection, which makes them difficult for basic security measures to catch. Advanced anti-scraping solutions are needed to identify these subtle patterns and stop data theft in real time.
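To make the extraction step concrete, here is a minimal sketch in Python, using only the standard library, of how a scraper might pull prices out of a page's HTML. The markup, the `price` class name, and the `PriceExtractor` class are hypothetical and chosen for illustration. Real scrapers fetch pages over the network and often drive full browsers, which this sketch does not attempt.

```python
from html.parser import HTMLParser

# Hypothetical product-listing markup, inlined so the example needs no network access.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$4.50</span></li>
</ul>
"""


class PriceExtractor(HTMLParser):
    """Collects the text of every <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices.append(data.strip())


def extract_prices(html):
    """Return the price strings found in the given HTML, in document order."""
    parser = PriceExtractor()
    parser.feed(html)
    return parser.prices


print(extract_prices(SAMPLE_HTML))  # prints ['$19.99', '$4.50']
```

A few lines of parsing are enough to harvest structured data from a page, which is why defenses focus on identifying the automated client rather than on hiding the markup.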

How do you prevent web scraping?

To prevent web scraping, businesses need layered defenses that combine anti-scraping technology, behavioral analysis, and bot mitigation tools. This includes analyzing user interactions, monitoring for unusual activity, and blocking suspicious requests before data can be extracted. HUMAN’s web scraping protection keeps sensitive information secure while maintaining access for legitimate users.

How does HUMAN protect my site from scraping?

HUMAN stops scrapers by detecting automation patterns that bots can’t hide, such as impossible browsing speeds or unusual traffic sources. Our anti-scraping technology keeps your site secure and your data out of the wrong hands.
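As a rough illustration of the "impossible browsing speeds" signal, the sketch below flags clients whose median gap between requests is shorter than a person could plausibly manage. It is a simplified heuristic written for this page and not HUMAN's detection method. The function name `flag_fast_clients`, the 0.5-second threshold, and the minimum request count are all assumptions.

```python
from collections import defaultdict
from statistics import median

# Assumed threshold: a median gap between requests below this many seconds
# is treated as faster than a person could plausibly browse.
MIN_HUMAN_GAP_SECONDS = 0.5
# Assumed minimum sample size, so a single double-click is not flagged.
MIN_REQUESTS = 5


def flag_fast_clients(requests, min_gap=MIN_HUMAN_GAP_SECONDS, min_requests=MIN_REQUESTS):
    """Return the set of client IDs whose median inter-request gap is below min_gap.

    `requests` is an iterable of (client_id, timestamp_seconds) pairs.
    """
    by_client = defaultdict(list)
    for client_id, timestamp in requests:
        by_client[client_id].append(timestamp)

    flagged = set()
    for client_id, stamps in by_client.items():
        if len(stamps) < min_requests:
            continue  # not enough evidence to judge this client
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        if median(gaps) < min_gap:
            flagged.add(client_id)
    return flagged


# Hypothetical traffic: one client requests every 0.1 s, the other every 7 s.
log = [("bot", t * 0.1) for t in range(10)] + [("person", t * 7.0) for t in range(10)]
print(flag_fast_clients(log))  # prints {'bot'}
```

A single timing rule like this is easy for a scraper to evade by slowing down, so in practice it would be only one of many signals combined.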

Can HUMAN stop other types of bots?

Yes. In addition to anti-scraping capabilities, HUMAN defends against a wide range of automated threats, including credential stuffing, inventory hoarding, fake account creation, and ad fraud. Our platform is designed to protect the full digital journey from all types of malicious bot traffic.
