In the process of creating and summarizing content, large language models (LLMs) and generative AI platforms rely on extensive automated scraping of sites across the web. For publishers and other content-driven digital platforms, scraping by AI agents can range from unwanted to outright copyright infringement.

Understanding AI-driven scraping bots

Content-driven platforms face several problems from unauthorized scraping by AI agents:

AI agents scraping content to answer user queries.

AI agents use bots to scrape web content and return responses to user queries. For example, you could ask an AI assistant such as ChatGPT “What is the latest news in my area?” and it will perform a search, scrape the results, and return a summary, all without the user ever visiting the source site. Beyond taking that information without permission, the summary is sometimes stripped of context or factually incorrect, which adds misinformation to the problems of content scraping.

Scraping of content to train LLMs.

LLMs work much like a giant knowledge base, and they can grow only by being fed more information. AI developers sometimes use scraping bots to capture information from websites to feed the beast. Responsible AI developers identify their crawlers with a distinct user agent, allowing organizations to decide whether to allow or block them. The challenge arises with AI agents that do not declare themselves or actively obscure their intent to scrape. Recent articles have reported that some new AI players are scraping even more aggressively while ignoring robots.txt (a file at the root of a site that tells bots which pages they can and cannot access; compliance with it is voluntary).
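Because robots.txt is only a set of instructions, it works only when a crawler chooses to check it. Here is a minimal sketch of that check using Python's standard-library `urllib.robotparser`. The rules and the example.com URL are illustrative assumptions, and `GPTBot` simply stands in for a crawler that declares itself. A scraper that ignores robots.txt never performs this check, so nothing in the file stops it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: block one self-declared AI crawler site-wide,
# allow Googlebot everywhere, and keep everyone else out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
url = "https://example.com/news/local-story"

# A compliant crawler asks before each request, using its own product token.
# A non-compliant scraper simply never calls can_fetch().
for agent in ("GPTBot", "Googlebot"):
    print(agent, parser.can_fetch(agent, url))
```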

Performance impact.

Large-scale scraping can also degrade web application and site performance, resulting in a poor experience for users, lost revenue or, in extreme cases, denial of service. Scraping bots can also inflate invalid traffic (IVT) rates by loading ads as they crawl an application, devaluing your ad space.

The challenges of using robots.txt to manage AI-driven scrapers

The robots.txt file has long been the standard for managing web crawlers, but its limitations are increasingly evident with AI-driven scraping bots. As mentioned above, many AI scrapers do not identify themselves, making it hard for website operators to block them effectively. Others change their user agents frequently as they update their scrapers, so rules written for an earlier name can quietly stop applying.
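Since robots.txt groups are matched against the name a bot reports about itself, a scraper that omits or changes that name is judged by the catch-all `*` group instead. The sketch below illustrates this with `urllib.robotparser`. The rules are illustrative, and `NewScraperBot` is a hypothetical name standing in for an undeclared or renamed scraper.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: one named AI crawler is blocked outright,
# every other agent only loses access to /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
url = "https://example.com/news/local-story"

# The declared crawler matches its own group and is refused.
declared = parser.can_fetch("GPTBot", url)
# A hypothetical renamed scraper matches no named group and falls to "*".
renamed = parser.can_fetch("NewScraperBot", url)

print(f"declared bot allowed: {declared}")  # declared bot allowed: False
print(f"renamed bot allowed: {renamed}")    # renamed bot allowed: True
```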

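Crawlers also cap how much of a robots.txt file they will read (Google, for example, processes only the first 500 KiB and ignores the rest), which poses challenges for organizations managing complex content ecosystems, especially as the number of AI scrapers grows. Manually updating the file to account for every bot quickly becomes untenable.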
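One way to keep the file manageable is to generate it from a bot list and check its size automatically. The sketch below uses a short list of real crawler tokens as an illustrative, not complete, inventory, and `build_robots_txt` and `size_report` are hypothetical helper names. It places all blocked agents in a single group, which the robots.txt standard (RFC 9309) permits and which keeps the file smaller than one group per bot, then sanity-checks the output with `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser

# Google documents that it processes only the first 500 KiB of robots.txt.
SIZE_LIMIT_BYTES = 500 * 1024


def build_robots_txt(blocked_agents, default_disallow=("/private/",)):
    """Render one shared group blocking every listed agent site-wide,
    followed by a catch-all group for all other crawlers."""
    lines = []
    if blocked_agents:
        lines.extend(f"User-agent: {agent}" for agent in blocked_agents)
        lines.append("Disallow: /")
        lines.append("")  # a blank line ends the group
    lines.append("User-agent: *")
    lines.extend(f"Disallow: {path}" for path in default_disallow)
    return "\n".join(lines) + "\n"


def size_report(robots_txt):
    """Return the encoded size in bytes and whether it fits within the limit."""
    size = len(robots_txt.encode("utf-8"))
    return size, size <= SIZE_LIMIT_BYTES


# Illustrative crawler tokens; a real list would come from your own bot inventory.
ai_agents = ["GPTBot", "CCBot", "ClaudeBot", "PerplexityBot"]
robots_txt = build_robots_txt(ai_agents)
size, fits = size_report(robots_txt)
print(f"{size} bytes, within limit: {fits}")

# Confirm the generated rules behave as intended.
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("CCBot", "https://example.com/article"))      # False
print(parser.can_fetch("Googlebot", "https://example.com/article"))  # True
```

Generating the file this way turns each new bot into a one-line change to a list rather than a hand edit.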

For large crawlers like Googlebot and Bingbot, there is currently no complete way to differentiate between data used for traditional search engine indexing (where publishers and search engines have an implicit “agreement” based on citations to the original source) and data used to train or power generative AI products. This lack of granularity puts publishers in a difficult position: blocking Googlebot or Bingbot entirely to keep their content out of generative AI also costs them search visibility.

How HUMAN helps

HUMAN makes it easy to identify and manage AI-related traffic. Customers can choose from three primary response options:

Block all LLM bots by default

HUMAN blocks LLM bots by default so that publishers are protected from unwanted scraping and theft of their proprietary content. HUMAN’s industry-leading decision engine uses advanced machine learning, behavioral analysis, and intelligent fingerprinting to block scraping bot traffic at the edge, often before it can access a single page. HUMAN allows bots to access your application only if you have specifically allowed them to do so.

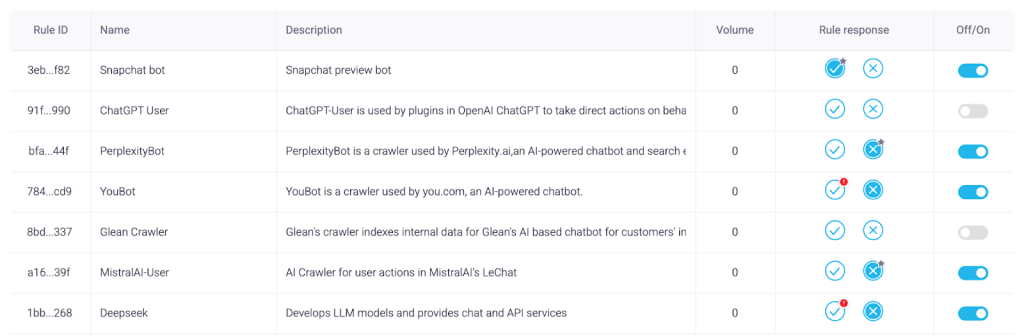

Allow known AI bots and crawlers

Customers can choose to allow trusted bots to access their content unimpeded. A simple Off/On toggle lets users quickly decide whether to allow or block AI traffic. This adds a layer of enforcement to your robots.txt file, which some LLM scraping agents ignore. If you choose to allow the scrapers, you can also set up custom policies to suppress ads or show alternative content.

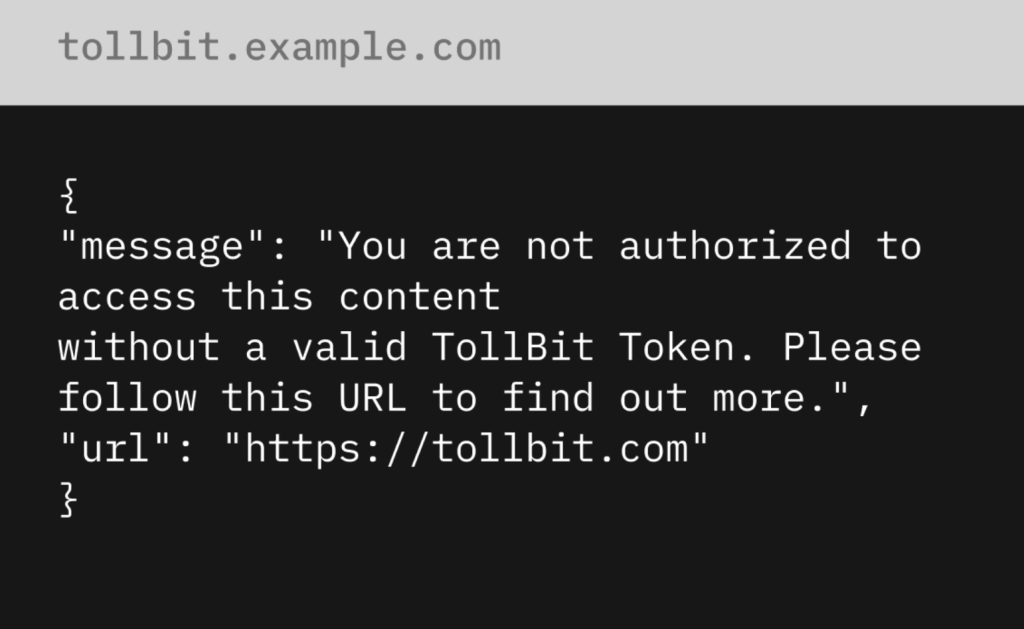

Monetize LLMs on a per-use basis

If HUMAN detects an AI bot that is not on the customer’s allow list, the customer has the option to send that bot to TollBit’s scraping paywall. There, you can set and enforce payment policies that require bots to pay per scrape. This lets publishers keep unauthorized AI agents from scraping their proprietary content unless they provide fair compensation.
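The three options above amount to a small decision policy. The sketch below is hypothetical and is not HUMAN’s or TollBit’s API. It assumes bot detection has already happened upstream (the hard part, handled by the decision engine described above) and that each request arrives labeled with a bot name and an `is_ai_bot` flag. `BotPolicy`, `Action`, and their fields are illustrative names.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"
    PAYWALL = "paywall"


@dataclass
class BotPolicy:
    """Hypothetical site settings mirroring the three response options."""
    allow_list: set = field(default_factory=set)  # AI bots toggled on
    paywall_enabled: bool = False  # send unlisted AI bots to a pay-per-scrape gate

    def decide(self, bot_name: str, is_ai_bot: bool) -> Action:
        if not is_ai_bot:
            return Action.ALLOW  # non-AI traffic is out of scope for this sketch
        if bot_name in self.allow_list:
            return Action.ALLOW  # explicitly trusted AI bot
        if self.paywall_enabled:
            return Action.PAYWALL  # unlisted AI bot must pay per scrape
        return Action.BLOCK  # default: block unlisted AI bots


policy = BotPolicy(allow_list={"GPTBot"}, paywall_enabled=True)
print(policy.decide("GPTBot", is_ai_bot=True))      # Action.ALLOW
print(policy.decide("CCBot", is_ai_bot=True))       # Action.PAYWALL
print(BotPolicy().decide("CCBot", is_ai_bot=True))  # Action.BLOCK
```

Blocking is the fallback whenever a bot is neither allowed nor paywalled, which mirrors the block-by-default behavior.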

HUMAN provides visibility into AI bots and agents

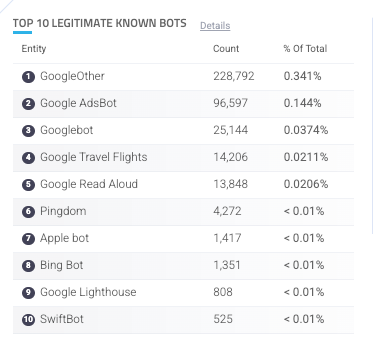

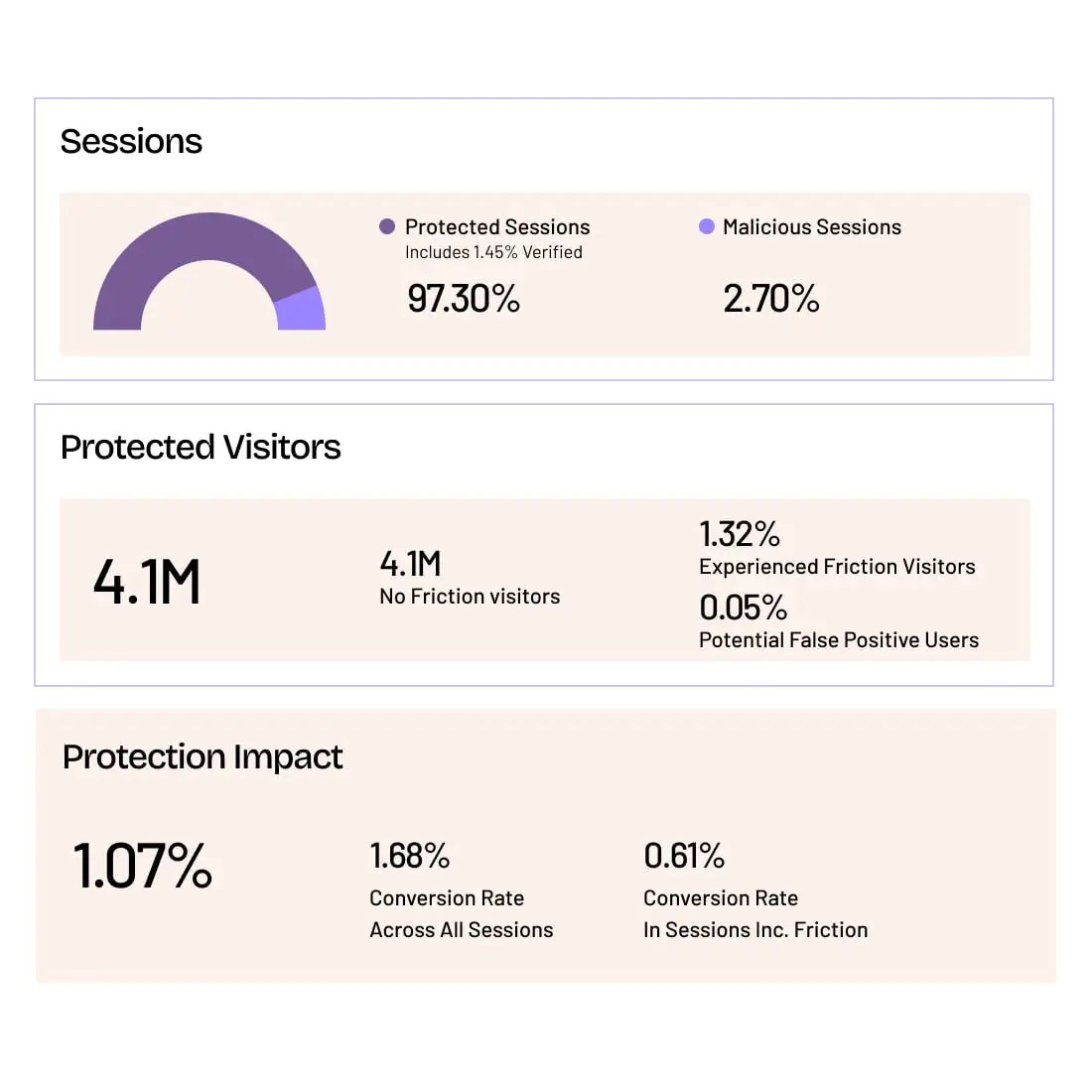

Traffic from known bots, crawlers, and AI agents is automatically highlighted in HUMAN activity dashboards, and the system notifies you when new bots appear on your applications. This makes it easy for organizations to see how much traffic is hitting their applications and websites, along with a granular breakdown of which bots contribute to that traffic and to what degree.

HUMAN also surfaces which bots and AI agents are accessing your content and which paths they are targeting. This allows publishers to monitor the impact and make informed decisions to protect their assets.
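A per-bot, per-path breakdown of this kind can be approximated from ordinary access logs. The sketch below is an illustration, not how HUMAN’s dashboards work. It assumes logs already reduced to `(user_agent, path)` pairs, uses a short, non-exhaustive list of real AI crawler tokens, and runs on made-up sample records. Because it relies on self-declared user agents, it misses exactly the bots that hide or change their names.

```python
from collections import Counter, defaultdict

# Illustrative, non-exhaustive tokens that some AI crawlers include in their
# user-agent strings. Bots that hide or change their name will not match.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "CCBot", "PerplexityBot")


def match_ai_bot(user_agent):
    """Return the first known token found in the user agent, or None."""
    ua = user_agent.lower()
    for token in AI_BOT_TOKENS:
        if token.lower() in ua:
            return token
    return None


def summarize(records):
    """Count hits per bot and per (bot, path) from (user_agent, path) pairs."""
    hits = Counter()
    paths = defaultdict(Counter)
    for user_agent, path in records:
        bot = match_ai_bot(user_agent)
        if bot is None:
            continue  # not a self-declared AI bot
        hits[bot] += 1
        paths[bot][path] += 1
    return hits, paths


# Made-up sample records for illustration only.
sample = [
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", "/news/a"),
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", "/news/a"),
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", "/news/b"),
    ("CCBot/2.0", "/news/a"),
    ("Mozilla/5.0 (Windows NT 10.0; Win64; x64)", "/news/a"),
]
hits, paths = summarize(sample)
print(hits.most_common())              # [('GPTBot', 3), ('CCBot', 1)]
print(paths["GPTBot"].most_common(1))  # [('/news/a', 2)]
```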

Effective strategies to combat AI-powered scraping

Keeping an up-to-date robots.txt file is a strong first step to manage legitimate bots and crawlers—but robots.txt alone is no longer enough. Organizations need granular visibility into AI scraping activity and complete control to respond in a way that works for their unique business.

With HUMAN Cyberfraud Defense, you can see exactly how much AI scraping is hitting your site.

Request a free demo.
