Navigating Agentic AI Security: Understanding OWASP Threats and Enabling Authentic & Trusted Interactions

Read time: 12 minutes

Tomer Elias

The artificial intelligence landscape is evolving rapidly, constantly pushing the boundaries of what automated systems can achieve. Today, we’ve moved beyond static programs and even conversational chatbots to the era of Agentic AI. These sophisticated systems are capable of autonomous decision-making, planning, and interacting with complex environments and tools to achieve goals without constant human guidance. This evolution isn’t just technical: it will profoundly change how businesses operate, compete, and interact with customers in a world increasingly shaped by autonomous AI agents.

The Agentic AI Opportunity

Businesses are embracing agentic AI for its transformative potential to enhance efficiency and revolutionize the customer journey, particularly in B2C sectors. By enabling personalized assistance and automating complex interactions, businesses can unlock new opportunities through agentic commerce. The potential impact of agentic AI is immense. According to Gartner®, “by 2035, 80% of internet traffic could be driven by AI Agents.”1

This shift demands a new posture: not all bots are threats. Success in the agentic era will depend on the ability to verify and trust automated agents, not simply block them.

Emerging Security Challenges

However, this increased autonomy and capability also introduces a new wave of cybersecurity challenges, which must be taken seriously. As AI agents operate more independently and interact with critical systems, they become potential targets and vectors for novel forms of attack. Recognizing these risks, the Open Web Application Security Project (OWASP) launched the Agentic Security Initiative to identify emerging cybersecurity threats posed by AI agents and propose mitigation strategies. OWASP’s full report, Agentic AI Threats and Mitigations, describes these threats in detail.

While OWASP’s guidance is primarily aimed at agent developers to help them build more secure agents, recognizing these threats is also crucial for organizations that receive traffic from external agents. This applies particularly to those considering agentic commerce, where third-party AI agents make automated transactions, because untrusted, over-privileged, or hallucinating AI agents can introduce security and operational risks.

Building Digital Trust in the Agentic Era

To confidently capitalize on the opportunities presented by agentic AI and enable seamless, automated interactions despite these risks, businesses require a foundation of digital trust.

HUMAN helps organizations interacting with external AI agents gain visibility, trust, and control over that traffic, confidently enabling legitimate agents for business growth while proactively identifying and defending against malicious ones.

In this blog, we’ll explore the emerging security threats identified by OWASP in the context of Agentic AI systems and how HUMAN provides a critical layer of visibility for organizations engaging with agentic AI to enable agentic commerce, even when those threats originate from external, third-party-developed AI agents.

What is Agentic AI?

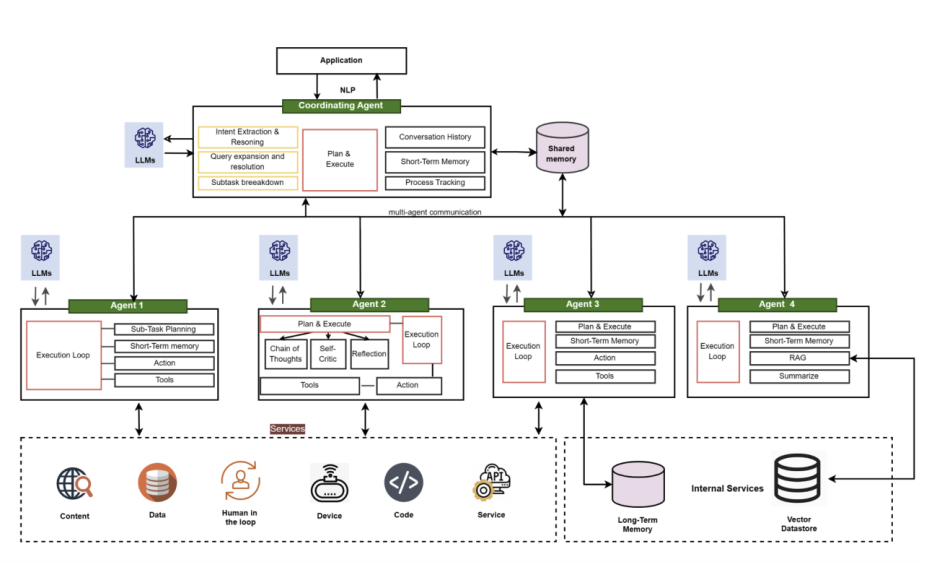

Agentic AI refers to AI systems designed to operate with a degree of autonomy. Unlike traditional bots that follow rigid, predefined scripts, agentic systems are built to understand a high-level goal and then independently plan, make decisions, and take actions to achieve that goal, often acting on a user’s behalf without requiring their constant intervention in the process. These systems are typically characterized by a cycle of perception, decision-making (or reasoning), and autonomous action.

Effectively executing this cycle relies on several core capabilities:

- Perception: The ability to receive and interpret information from their environment or inputs.

- Planning and reasoning: Processing perceived information, understanding goals, evaluating situations, and determining the steps necessary to achieve objectives. Agentic AI often leverages large language models (LLMs) as the “brain” for this reasoning and planning.

- Memory/Statefulness: Retaining and recalling information from past interactions or steps to maintain context and learn over time.

- Autonomous decision-making: The ability to evaluate situations and choose courses of action without constant human intervention.

- Action and tool use: The ability to perform tasks, interact with external systems (like web browsers, calendars, or business applications) via APIs and protocols such as the Model Context Protocol (MCP), and use various tools to effect change in their environment.
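The cycle described above can be made concrete with a small sketch. This is an illustrative toy, not any vendor’s agent framework: the `Agent` class, the `toy_planner` function, and the `search` and `reserve` tools are all hypothetical names, and a rule-based function stands in for the LLM that would normally do the reasoning.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A planner maps (goal, memory) to the next (tool_name, argument),
# or returns None when it considers the goal complete.
Planner = Callable[[str, list], Optional[tuple]]


@dataclass
class Agent:
    goal: str
    tools: dict            # tool name -> callable taking one string argument
    planner: Planner       # stand-in for LLM-based reasoning (assumption)
    memory: list = field(default_factory=list)

    def run(self, observation: str, max_steps: int = 5) -> list:
        # Perception: record the incoming observation.
        self.memory.append(f"observed: {observation}")
        for _ in range(max_steps):
            # Planning and autonomous decision-making: choose the next step.
            step = self.planner(self.goal, self.memory)
            if step is None:
                break
            tool_name, arg = step
            # Action and tool use: call the selected tool.
            result = self.tools[tool_name](arg)
            # Memory/statefulness: keep the result for later steps.
            self.memory.append(f"{tool_name}({arg}) -> {result}")
        return self.memory


def toy_planner(goal, memory):
    # Hypothetical rule-based planner: search first, then reserve, then stop.
    if not any(m.startswith("search(") for m in memory):
        return ("search", goal)
    if not any(m.startswith("reserve(") for m in memory):
        return ("reserve", "table for two")
    return None


tools = {
    "search": lambda query: f"3 results for '{query}'",
    "reserve": lambda what: f"confirmed {what}",
}

agent = Agent(goal="book dinner", tools=tools, planner=toy_planner)
trace = agent.run("user asked for a dinner booking")
for entry in trace:
    print(entry)
```

The `max_steps` cap is a deliberate design choice in this sketch: an autonomous loop without a step budget is one simple way an agent can consume resources without bound.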

This represents an evolution from earlier AI applications. While traditional LLMs excelled at generating text, systems like Computer-Using Agents (CUAs), including early examples like OpenAI’s Operator, began pushing the boundaries by autonomously interacting with computer environments. Agentic AI builds on this, orchestrating more complex workflows and adapting in real-time.

Agentic systems can operate as single agents focused on specific tasks or within multi-agent architectures, where multiple agents collaborate and communicate to achieve more complex objectives.

Key use cases emerging or anticipated for Agentic AI span numerous sectors:

- Shopping and transaction automation: Agents that can find products, compare prices, and complete purchases autonomously. Examples include platforms and agents designed to automate research, comparisons, and making a purchase on behalf of users. Specific examples include Perplexity Shopping and Amazon’s “Buy for Me.”

- Reservation and booking assistance: Systems that can handle scheduling appointments, booking flights, or making reservations across various services.

- Browser and computer automation agents: Agents capable of navigating digital environments, filling out forms, and executing tasks within software applications. This includes solutions that control desktop environments or navigate web interfaces to complete tasks like product selection, checkout, and payment processes. Examples include tools like OpenAI’s Operator and Microsoft’s Copilot Actions.

Understanding the Risks: OWASP’s Agentic AI Threat Landscape

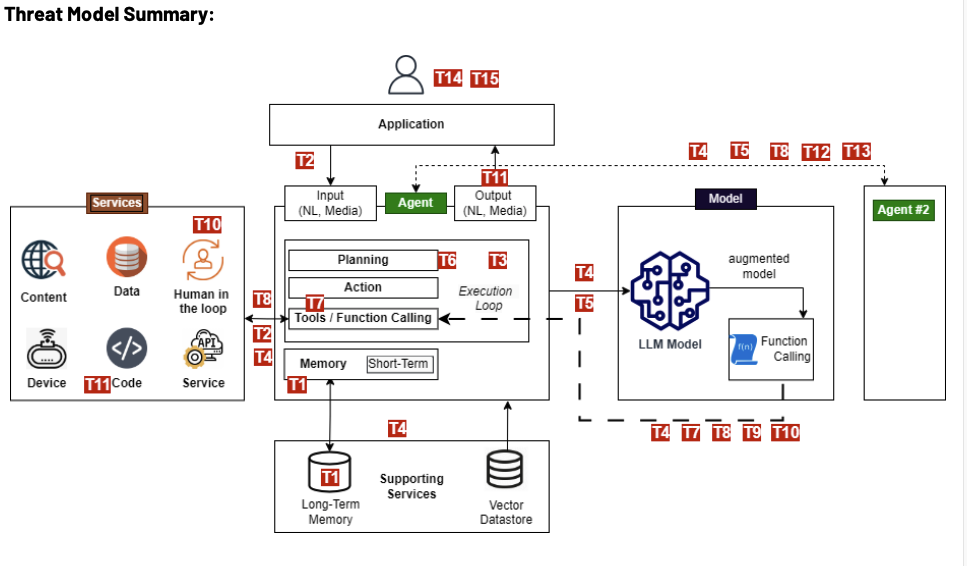

OWASP has identified a comprehensive range of 15 potential threats that organizations engaging with agentic systems should be aware of. While OWASP’s guidance is primarily aimed at helping AI developers implement security controls during development, organizations that interact with external agentic systems need to be aware of these potential threats due to the risks they could introduce at the point of interaction.

These threats go beyond traditional application security concerns, specifically targeting the novel components and behaviors of AI agents, such as their autonomy, use of memory, interaction with tools, and communication with other agents or humans.

The nature of these risks can be broadly grouped. Some target the agent’s internal reasoning and memory, attempting to poison the information it uses or manipulate its goals. Others exploit the agent’s ability to use tools and execute actions, potentially leading to unauthorized operations or resource abuse. Still others focus on authentication, identity, and the human element: impersonating agents or users, overwhelming human oversight, or manipulating users. Finally, complex threats emerge in multi-agent systems, involving poisoned communication or rogue agent behavior. The full list of OWASP Agentic AI Threats is as follows:

Memory Poisoning (T1): Attackers exploit an AI’s memory to introduce malicious or false data, altering decision-making and potentially leading to unauthorized operations.

Tool Misuse (T2): Attackers manipulate AI agents into abusing their integrated tools through deceptive prompts or commands to perform unintended actions.

Privilege Compromise (T3): Weaknesses in permission management are exploited to perform unauthorized actions, often involving dynamic role inheritance or misconfigurations.

Resource Overload (T4): Computational, memory, or service capacities of AI systems are targeted to degrade performance or cause failures.

Cascading Hallucination Attacks (T5): False information generated by an AI propagates through systems, disrupting decision-making and potentially affecting tool use.

Intent Breaking & Goal Manipulation (T6): Vulnerabilities in an AI agent’s planning are exploited to manipulate or redirect its objectives and reasoning.

Misaligned & Deceptive Behaviors (T7): AI agents execute harmful or disallowed actions by exploiting reasoning to meet objectives in a deceptive manner.

Repudiation & Untraceability (T8): Actions performed by AI agents cannot be reliably traced back or accounted for due to insufficient logging or transparency.

Identity Spoofing & Impersonation (T9): Attackers exploit authentication to impersonate AI agents or human users and execute unauthorized actions under false identities.

Overwhelming Human in the Loop (T10): Systems with human oversight are targeted to exploit human cognitive limitations or compromise interaction frameworks.

Unexpected Remote Code Execution and Code Attacks (T11): AI-generated execution environments are exploited to inject malicious code or trigger unintended system behaviors.

Agent Communication Poisoning (T12): Attackers manipulate communication channels between AI agents to spread false information or disrupt workflows.

Rogue Agents in Multi-Agent Systems (T13): Malicious or compromised AI agents operate outside normal monitoring boundaries, executing unauthorized actions or exfiltrating data.

Human Attacks on Multi-Agent Systems (T14): Adversaries exploit inter-agent delegation and trust relationships to escalate privileges or manipulate AI-driven operations.

Human Manipulation (T15): Attackers exploit user trust in AI agents in direct interactions to coerce users into harmful actions or spread misinformation.

Our early research into Agentic AI “in the wild” identified behaviors that align with this threat landscape, including the potential for unwanted content scraping that impacts ad revenue, the security concerns when agents might control a user’s device or browser, and observations regarding how early agents handled standard web conventions like robots.txt.
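For readers less familiar with the robots.txt convention mentioned above, the following sketch shows how a well-behaved agent could check a URL against a site’s rules before fetching it, using Python’s standard `urllib.robotparser` module. The `ExampleAgent` user-agent string, the `shop.example` URLs, and the rules themselves are made up for illustration; this does not describe how any particular agent actually behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example storefront.
ROBOTS_TXT = """\
User-agent: ExampleAgent
Disallow: /checkout/

User-agent: *
Disallow: /private/
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network call is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed("ExampleAgent", "https://shop.example/products/1"))
print(is_allowed("ExampleAgent", "https://shop.example/checkout/cart"))
print(is_allowed("OtherBot", "https://shop.example/private/report"))
```

Note that robots.txt is advisory: it only constrains agents that choose to consult it, which is why observing how agents actually treat it is informative.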

Our intelligence team is actively monitoring agentic AI developments across vendors to detect emerging agent behaviors and provide our customers with continuous visibility and control. Since our initial research, the landscape has rapidly evolved. Future updates will deliver deeper analyses of real-world agentic activity, expanded detection methodologies, and strategies for securing interactions as agent ecosystems mature.

Addressing Agentic AI Security: A Multi-Layered Approach

Effectively defending against agentic AI threats demands a multi-layered security posture. This means implementing controls at multiple levels: developers building AI agents must incorporate security within the agent’s design and code, platforms hosting agents need to provide secure execution environments, and critically, defenses are needed at the points where AI agents interact with external systems and human users.

The OWASP Agentic Security Initiative has outlined various mitigation strategies. Their work provides valuable guidance for agent developers on how to build more secure agents, emphasizing that securing the agentic AI landscape is not a single-point solution but requires a comprehensive approach across the ecosystem.

OWASP’s mitigation strategies cover several key areas: they recommend implementing strict access control policies and granular permissions to limit which tools and data agents can access, as well as monitoring and behavioral profiling aimed at detecting anomalies in agent activity that could signal manipulation or rogue behavior. Validating agent inputs and outputs and securing communication channels between agents and other systems are also recommended as best practices to prevent poisoning and deceptive actions.
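As a rough illustration of two of these ideas, granular permissions and input validation, the sketch below checks a requested tool call against a per-agent policy before it runs. The `AgentPolicy` type, the `check_tool_call` function, the `purchase` tool, and the spending limit are hypothetical names chosen for this example; they are not taken from OWASP’s report.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    allowed_tools: frozenset      # tools this agent may invoke
    max_purchase_usd: float       # hypothetical per-transaction limit


def check_tool_call(policy: AgentPolicy, tool: str, args: dict) -> tuple:
    """Return (allowed, reason) for a requested tool call."""
    # Access control: anything not explicitly allowed is rejected.
    if tool not in policy.allowed_tools:
        return (False, f"tool '{tool}' is not permitted for this agent")
    # Input validation: check arguments before the tool ever runs.
    if tool == "purchase":
        amount = args.get("amount_usd")
        if isinstance(amount, bool) or not isinstance(amount, (int, float)):
            return (False, "amount_usd must be a number")
        if amount <= 0:
            return (False, "amount_usd must be positive")
        if amount > policy.max_purchase_usd:
            return (False, "amount_usd exceeds the agent's limit")
    return (True, "ok")


policy = AgentPolicy(
    allowed_tools=frozenset({"search", "purchase"}),
    max_purchase_usd=100.0,
)

print(check_tool_call(policy, "search", {"query": "running shoes"}))
print(check_tool_call(policy, "purchase", {"amount_usd": 250}))
print(check_tool_call(policy, "delete_account", {}))
```

Returning a reason alongside the decision also gives a natural place to log each check, which supports the monitoring and traceability goals described above.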

HUMAN’s Role: Gaining Visibility, Trust, and Control in the Agentic AI Era

In the rapidly evolving landscape of Agentic AI and agentic commerce, organizations need to gain visibility, trust, and control over the automated traffic interacting with their digital assets along the customer journey. This is what HUMAN provides, acting as a layer of trust and security for businesses whose systems and applications are being interacted with by external, third-party AI agents.

Visibility: HUMAN gives you deep insight into who (or what) is acting on your platform, why, and how. By analyzing over 20 trillion interactions across billions of devices weekly, we gain comprehensive visibility into traffic patterns from bots, humans, and AI agents. Our technology accurately identifies and fingerprints different types of agentic traffic, providing the granular understanding you need.

Trust: Building trust in agent interactions requires high-fidelity decisioning. Leveraging 2,500+ signals per interaction and 400+ AI/ML models, HUMAN can adaptively determine the nature and intent of agentic activity. This allows businesses to differentiate between legitimate agents carrying out desired tasks and malicious or unwanted agents attempting misuse or fraud. This level of analysis is key to establishing trust in automated interactions.

Control: With Visibility and Trust established, HUMAN empowers organizations with granular control over agentic traffic according to their specific business processes. This means you can confidently allow good traffic from legitimate agents, potentially rate limiting if needed to manage load, while simultaneously denying malicious or unwanted traffic that poses a risk. This control directly helps organizations minimize excessive agency by preventing rogue agents from taking unwanted or risky actions, such as modifying sensitive data or compromising user accounts.
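To make the allow, rate-limit, and deny outcomes concrete, here is a generic sketch of such a decision using a token bucket. It is not HUMAN’s implementation: the classification labels, the `decide` function, the bucket parameters, and the default-deny treatment of unknown traffic are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class TokenBucket:
    capacity: float         # maximum burst size
    refill_per_sec: float   # sustained request rate
    tokens: float           # tokens currently available
    last: float             # timestamp of the previous refill

    def take(self, now: float) -> bool:
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


def decide(classification: str, bucket: TokenBucket, now: float) -> str:
    """Map a traffic classification (assumed to come from upstream
    detection) to an action for a single request."""
    if classification == "trusted":
        # Legitimate agents are allowed, subject to load management.
        return "allow" if bucket.take(now) else "rate_limited"
    # Malicious traffic is denied; so is anything unrecognized
    # (a default-deny assumption made for this sketch).
    return "deny"


bucket = TokenBucket(capacity=2, refill_per_sec=1, tokens=2, last=0.0)
for t in (0.0, 0.0, 0.0, 1.0):
    print(t, decide("trusted", bucket, now=t))
print(decide("malicious", bucket, now=1.0))
```

Passing the clock in as `now` rather than reading it inside the bucket keeps the behavior deterministic and easy to test.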

This ability to identify, verify, and control agent interactions delivers significant business benefits:

- Boost Sales: Confidently enable legitimate AI agents to make purchases and interact with your services, unlocking new automated revenue streams through agentic commerce.

- Gain Visibility: Understand precisely how AI agents are interacting with your platform, gaining better operational and security insights.

- Minimize Excessive Agency: Prevent unintended or risky actions by controlling which agents can perform specific tasks.

- Prevent Fraud and Losses: Proactively defend against malicious AI activity, safeguarding your customers and applications from automated fraud and other harmful actions.

What makes HUMAN unique in providing this critical layer of defense? Protecting against agentic AI risk demands more than traditional bot mitigation; it requires behavioral intelligence, contextual awareness, and proven expertise. HUMAN delivers this through our ability to decode intent across humans, bots, and agents. Backed by our expert Satori threat intelligence team and trusted by hundreds of enterprises, we don’t just detect automation; we understand its purpose and behavior to protect the customer journey from inauthenticity at every interaction in the age of Agentic AI.

Navigating the Agentic Future with Confidence

The rise of Agentic AI marks a pivotal moment in digital transformation, offering immense opportunities for innovation and efficiency, particularly within agentic commerce and enhanced customer experiences. Yet, as OWASP’s work highlights, this new era of autonomous systems also introduces complex and evolving security threats that demand serious attention from the industry.

While securing the development and internal workings of AI agents is a vital part of the overall solution, organizations must also prioritize defense at the points where these external AI agents interact with their valuable systems and data.

This is where HUMAN provides a critical, enabling layer of security. By empowering businesses to gain visibility, trust, and control over the automated traffic interacting with their platforms, HUMAN allows organizations to confidently embrace the agentic future. Our expertise in accurately identifying and analyzing the intent and behavior of AI agents enables businesses to differentiate between legitimate, desired interactions and malicious or unwanted activity. This, in turn, provides the control necessary to allow beneficial traffic while effectively defending against risks like excessive agency and automated fraud.

Ultimately, navigating the agentic AI landscape successfully means being prepared. Understanding the threats is the first step; implementing the right controls at the interaction layer is essential to unlocking the opportunities safely and securely.

Ready to gain Visibility, Trust, and Control over Agentic AI interactions on your platform? Talk to a human to learn how HUMAN can help you confidently engage with the agentic era.

1. Gartner, Gartner Futures Lab: The Future of Identity, 7 April 2025

GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.