Agentic AI: The Future of Automation and Associated Risks

HUMAN, Satori Threat Intelligence and Research Team

Large Language Models (LLMs), and chatbots built on them such as ChatGPT, have transformed how we interact with AI by simulating human-like conversation and by generating and summarizing text. Now, a newer class of computer-using agents (CUAs), such as OpenAI’s Operator and Anthropic’s Computer Use for Claude, is pushing this paradigm further by autonomously performing tasks, making decisions, and interacting with multiple systems. This broader category of technology, often called Agentic AI, orchestrates complex workflows and adapts in real time without continual human oversight.

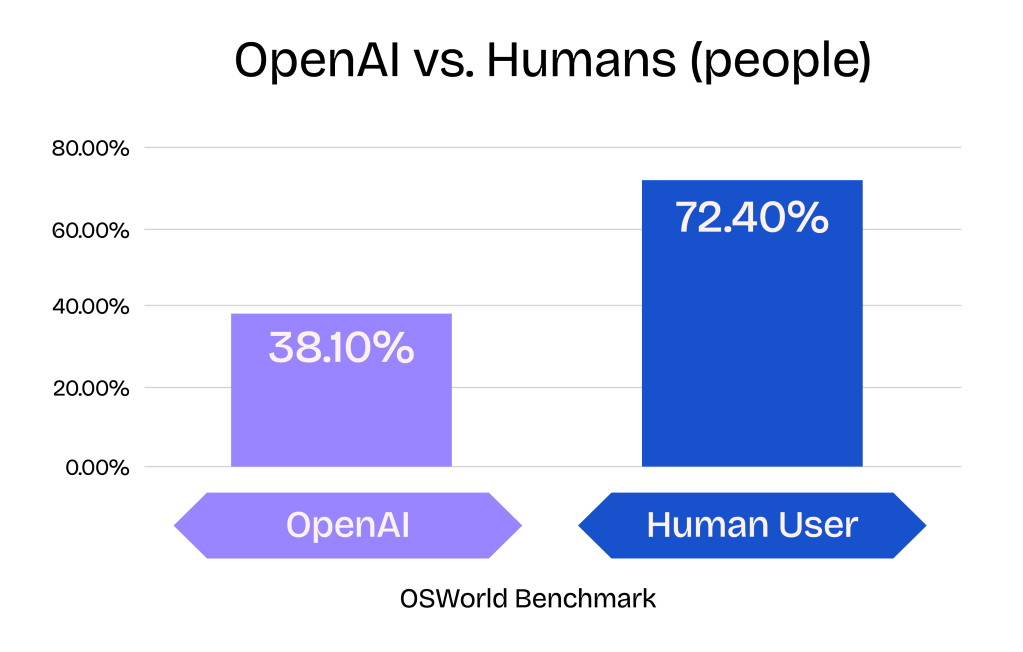

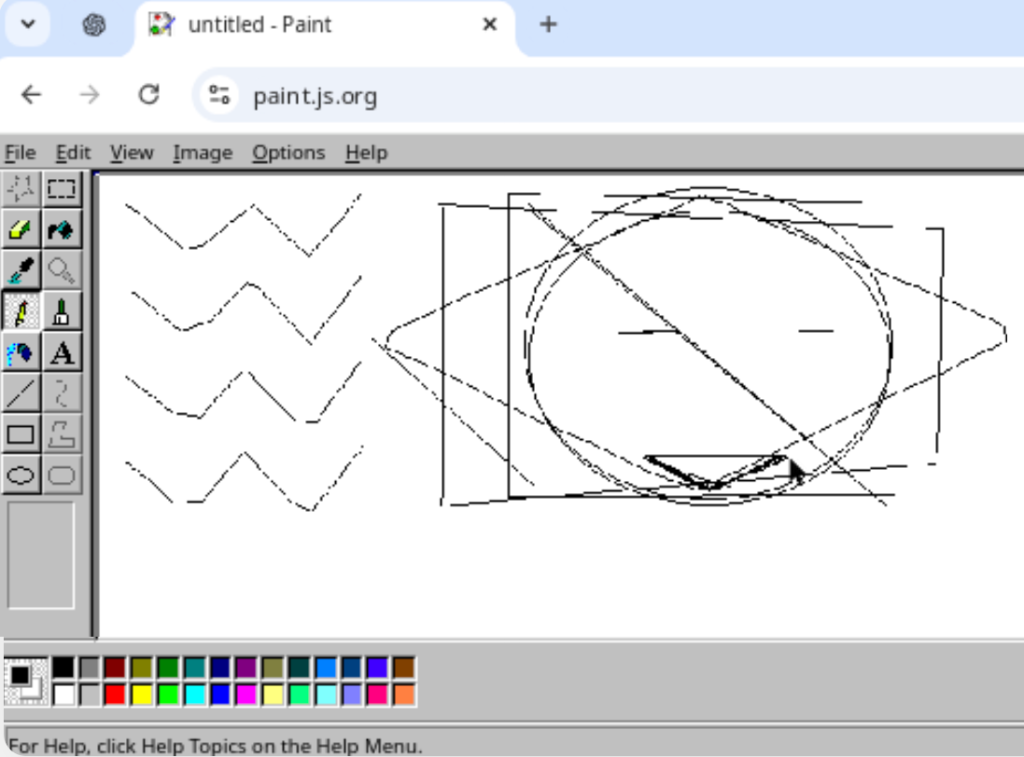

Agentic AI technology is still at an early stage, and as a result it is currently slow and error-prone. OpenAI’s CUA achieved a success rate of just 38.1% on the OSWorld benchmark, a standard test of how well an AI agent can operate a full computer system (managing applications, navigating menus, and performing multi-step actions). For comparison, humans score more than 70% on the same benchmark. User reports also indicate that the agent is slow. However, CUA capabilities are likely to improve quickly in the coming months and years, so it is worth understanding the opportunities and risks of Agentic AI now.

What is Agentic AI?

Agentic AI refers to systems that use AI to operate with some degree of autonomy: making decisions, taking actions, and adapting over an extended period of time. Examples include proactively managing a support queue, escalating issues to the correct department, and even initiating IT maintenance tasks on their own. This contrasts with traditional LLM chatbots, which primarily generate responses to user prompts, and with systems that follow a predefined set of instructions.

The term “Agentic AI” is not yet well defined in the market, but it most commonly refers to systems that use LLMs to pursue a user-provided goal with a given set of tools. You have most likely interacted with or seen these in the form of customer support chatbots, or in products that promise to monitor or scrape parts of the internet and deliver automated insights. Agentic AI could also complete simple tasks such as changing a flight, or finding the best price for a product and then purchasing it, though these use cases are generally not yet mature enough for mass-market adoption.
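
The “goal plus tools” pattern described above can be pictured as a simple loop: at each step a policy chooses the next tool, the tool runs, and its result is recorded until the policy decides the goal is met. This is a minimal sketch under stated assumptions, not how any particular product works: `stub_policy` is a hypothetical stand-in for the LLM call a real agent would make, and the tools `search_flights` and `pick_cheapest`, along with their hard-coded flight data, are invented for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]
Tool = Callable[[State], str]


def search_flights(state: State) -> str:
    """Hypothetical tool: pretend to query a flight-search service."""
    state["options"] = [("F100", 420), ("F200", 380), ("F300", 455)]
    return "found 3 flights"


def pick_cheapest(state: State) -> str:
    """Hypothetical tool: choose the lowest-priced option found so far."""
    flight, price = min(state["options"], key=lambda option: option[1])
    state["choice"] = (flight, price)
    return f"selected {flight} at ${price}"


TOOLS: Dict[str, Tool] = {
    "search_flights": search_flights,
    "pick_cheapest": pick_cheapest,
}


def stub_policy(goal: str, state: State, history: List[str]) -> Optional[str]:
    """Stand-in for an LLM: return the next tool name, or None when the goal is met."""
    if "options" not in state:
        return "search_flights"
    if "choice" not in state:
        return "pick_cheapest"
    return None


def run_agent(goal: str, policy, tools: Dict[str, Tool],
              max_steps: int = 5) -> Tuple[State, List[str]]:
    """Ask the policy for an action, run the tool, record the observation; repeat."""
    state: State = {}
    history: List[str] = []
    for _ in range(max_steps):  # hard cap on the number of actions
        action = policy(goal, state, history)
        if action is None:
            break
        observation = tools[action](state)
        history.append(f"{action}: {observation}")
    return state, history


state, history = run_agent("book the cheapest flight", stub_policy, TOOLS)
```

The `max_steps` bound is a deliberate choice: because a real LLM policy can loop or wander, capping the number of actions is a common safeguard.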

AI scraping agents are a related technology, although they are not usually categorized as Agentic AI. They collect content to train LLMs, and they also help LLMs respond to user requests such as “summarize this site for me” or “give me the latest news in New York.”

Risks Associated with Agentic AI

LLMs and AI CUAs bring a new set of risks to enterprises. For starters, they increase both the demand for web scraping and its accessibility: people without technical skills can now easily set up their own automated scraping solution to pull data or monitor web content, and then act on the results. Specific risks include:

- Content Scraping

Many popular consumer LLM chatbots, such as Perplexity and You.com, also search and retrieve online content to answer user questions. This creates a problem for websites that monetize their content through ads: the chat agent can keep the human user from ever visiting the source website, costing the site ad revenue and weakening its brand experience. In some cases, the ads may be served to the agent instead, which can put a publisher’s reputation at risk through increased rates of Invalid Traffic (IVT).

- Distributed Denial of Service (DDoS) Attacks

Some attack vectors, so far demonstrated only at small scale, abuse AI agents to flood a site with connections and take it down in a DDoS-style attack. In these cases, the requests appear to come from the agent itself rather than from the actual attacker. Given the current state of these agents, we don’t believe such attacks are practical: the agents’ slow speed and high cost make them economically unviable.

- Broad Attack Orchestration

Agentic AI could also orchestrate broader attacks against individual systems and the internet as a whole. A sufficiently advanced system could plausibly run a suite of tests or tools to check for vulnerabilities and then decide its next steps based on the results, emulating the process a human attacker might follow. Some startups are already trying to use Agentic AI for intelligent security testing, and it is easy to imagine attackers taking a similar approach.

- Device or Browser Control

With AI computer-using agents, the agent may control a real person’s device or browser (OpenAI Operator, by contrast, runs on virtual machines in a data center). While these capabilities are largely still in a pre-release state, they introduce a new set of security concerns, and organizations may want to govern and manage the access these third-party tools have. Potential risks include the agent accessing protected content (a compliance and data-loss concern), as well as hallucinations that lead the agent to take actions the end user did not intend, such as making a purchase, performing a monetary transfer, or editing account information.
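
One common way to govern what an agent may do is to place a gate between the actions it proposes and their execution. The sketch below assumes hypothetical action names (`navigate`, `purchase`, `transfer_funds`, `edit_account`) and a hypothetical domain allowlist; it restricts navigation to approved domains and requires explicit confirmation from the human user before any irreversible action. It illustrates the idea only and is not a feature of Operator, Computer Use, or HUMAN’s platform.

```python
from typing import Callable, Dict, Tuple
from urllib.parse import urlparse

# Hypothetical policy values, for illustration only.
SENSITIVE_ACTIONS = {"purchase", "transfer_funds", "edit_account"}
ALLOWED_DOMAINS = {"shop.example.com", "docs.example.com"}

ConfirmFn = Callable[[str, Dict[str, str]], bool]


def gate_action(action: str, params: Dict[str, str],
                confirm: ConfirmFn) -> Tuple[bool, str]:
    """Return (allowed, reason) for an action the agent proposes to take."""
    # Keep the agent on approved domains to limit access to protected content.
    if action == "navigate":
        host = urlparse(params.get("url", "")).hostname or ""
        if host not in ALLOWED_DOMAINS:
            return False, f"domain not allowed: {host or '(none)'}"
    # Irreversible actions require an explicit yes from the human user,
    # so a hallucinated step cannot complete on its own.
    if action in SENSITIVE_ACTIONS and not confirm(action, params):
        return False, f"user declined {action}"
    return True, "ok"


def always_decline(action: str, params: Dict[str, str]) -> bool:
    """Example confirmation callback: a user who says no to everything."""
    return False


print(gate_action("purchase", {"item": "laptop"}, always_decline))
# (False, 'user declined purchase')
```

In practice the `confirm` callback would surface a prompt to the person on whose behalf the agent acts; keeping it as a parameter makes the gate easy to test.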

- Unintended Consequences of Site Integrations

Application owners also take on risk when they integrate an AI agent into their own site. Today these are most commonly chat support bots, but in the near future we are likely to see more, such as personal shopping assistants built into a website. In these scenarios, the site owner bears some responsibility for the bot’s actions, including cases where the agent did not act in line with the user’s intent. For example, last year a Canadian tribunal ordered Air Canada to pay damages to a customer who had been given incorrect information by the airline’s AI chatbot.

Agentic AI in Action

HUMAN’s Satori Threat Intelligence and Research team has been investigating AI scraping agents and Agentic AI “in the wild” to determine their effect on the cyberthreat landscape.

The most immediate impact we are seeing is rising demand for commercially available scraping services. This is likely driven in part by agent developers finding it more efficient to outsource or contract out scraping so they can focus on their core offering: the agent itself. We also hypothesize that the lower barrier to setting up a scraping system contributes (for example, a demand gen marketer can now easily set up a system that scrapes content daily to generate content marketing ideas).

In our initial testing of AI scraping agents such as Perplexity, we were unable to trigger an on-demand scrape against any of our honeypot sites. However, the agents still returned responses about those sites, indicating that they had access to the site content. Our logs contained the signatures of many popular scraping services, leading us to believe that these products most likely outsource their data gathering. These results are preliminary, and we will publish a more in-depth article when the research is complete.
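
Attributing honeypot traffic to scraping services comes down to matching logged requests against known signatures. HUMAN’s actual signatures are not described here, so the sketch below assumes the simplest possible stand-in: case-insensitive substring matching on the User-Agent header, with hypothetical service names and tokens. Real attribution relies on much richer signals, since many automated clients present an ordinary browser User-Agent.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical signatures: service name -> substring expected in its User-Agent.
KNOWN_SIGNATURES: Dict[str, str] = {
    "scraper-service-a": "ScraperServiceA/",
    "scraper-service-b": "svc-b-fetcher",
}


def tally_signatures(user_agents: Iterable[str]) -> Counter:
    """Count requests per known service; anything unrecognized is 'unmatched'."""
    counts: Counter = Counter()
    for ua in user_agents:
        lowered = ua.lower()
        for name, token in KNOWN_SIGNATURES.items():
            if token.lower() in lowered:
                counts[name] += 1
                break  # attribute each request to at most one service
        else:
            counts["unmatched"] += 1
    return counts


# Hypothetical User-Agent values taken from a honeypot's access log.
honeypot_user_agents = [
    "ScraperServiceA/2.1 (+https://a.example/bot)",
    "Mozilla/5.0 (compatible; svc-b-fetcher/0.9)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/130.0.0.0 Safari/537.36",
    "ScraperServiceA/2.1 (+https://a.example/bot)",
]
print(tally_signatures(honeypot_user_agents))
```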

As for OpenAI Operator, the best-known AI-powered agent, we haven’t seen much evidence of its automation (or of any other completely new automated activity) across our network. In the lab, our Satori Threat Research team identified a fingerprint that uniquely identifies this agent. Using that fingerprint, we analyzed a sample of traffic across the HUMAN network and detected it at extremely low volume: under 0.00015% of the sampled traffic (fewer than two sessions per million). We generally saw only one or two sessions per domain, which suggests that these are individual Operator users experimenting with the new capabilities.
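
The two figures in the paragraph above express the same rate in different units: converting a percentage of traffic to sessions per million means multiplying by 1,000,000 / 100 = 10,000, so 0.00015% corresponds to 1.5 sessions per million, consistent with fewer than two per million. The helpers below make the conversion explicit; the 10-million-session sample size in the usage is hypothetical, since the size of the analyzed sample is not stated.

```python
def percent_to_per_million(percent: float) -> float:
    """Express a percentage of traffic as sessions per million sessions."""
    return percent / 100 * 1_000_000


def expected_sessions(sample_size: int, percent: float) -> float:
    """Expected number of matching sessions in a sample of the given size."""
    return sample_size * percent / 100


per_million = percent_to_per_million(0.00015)       # about 1.5
in_sample = expected_sessions(10_000_000, 0.00015)  # about 15 (hypothetical sample size)
assert per_million < 2  # consistent with "fewer than two sessions per million"
```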

Notably, none of these agents currently identifies itself or respects robots.txt. Because they run a real browser, they present themselves as an ordinary Chrome instance (User-Agent: Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/130.0.0.0 Safari/537.36). We strongly encourage companies developing agents to work with vendors like HUMAN on standards for identifying and verifying agentic traffic. This will improve the user experience and success rate of agents while preventing bad actors from impersonating agentic traffic.
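
To see why a generic Chrome User-Agent sidesteps robots.txt rules aimed at a specific agent, consider Python’s standard `urllib.robotparser`. In this sketch, a site disallows a hypothetical agent token `ExampleAgent`; a client that declares that token is refused, while the Chrome User-Agent string quoted above falls through to the permissive `*` group. Keep in mind that robots.txt is advisory either way: the check only happens if the client chooses to perform it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block a self-declared agent token, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAgent
Disallow: /

User-agent: *
Allow: /
"""

# The User-Agent string the agents were observed presenting (an ordinary Chrome build).
CHROME_UA = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/130.0.0.0 Safari/537.36"
)

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/articles/latest"
# robotparser applies a named group when its token appears in the product name
# (the text before the first "/") of the User-Agent being checked.
declared = parser.can_fetch("ExampleAgent/1.0", url)  # the named group disallows /
undeclared = parser.can_fetch(CHROME_UA, url)         # only the "*" group applies
print(declared, undeclared)
```

This is why the paragraph above argues for explicit identification: a site can only write rules for an agent it can recognize.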

To date, our threat intelligence has not revealed any signs that attackers or malicious groups are leveraging these capabilities. Although they are more accessible, AI-powered computer agents are less efficient than traditional techniques for creating bots at scale.

Opportunities for Organizations with Agentic AI

AI and associated technologies, such as shopping assistants or AI-controlled browsers, present enterprises with many new risks, but also with new opportunities. In 2024, “88% of shoppers used AI in some form during the recent holiday retail season, and 56% of shoppers said they were happier from having that extra support.” Note, however, that this statistic covers a broader set of AI capabilities, including AI-powered recommendations.

In speaking with our customers, we have learned that many are open to allowing these technologies on their applications if they help drive business. However, they are not yet doing so, because the technologies are still at a very early stage and generally not ready for full enterprise use.

In the specific case of AI scraping agents, some publishers are turning scraping into a new source of recurring revenue. Earlier this year, HUMAN announced a partnership and integration with TollBit that enables publishers to generate recurring revenue from content scraping. HUMAN’s industry-leading AI-powered decision engine detects AI agents and sends them to TollBit’s paywall, where AI scrapers can discover site content through the TollBit marketplace and purchase access to it at customized rates. HUMAN then enforces scrape-blocking, ensuring that unauthorized scrapers cannot access the publisher’s content.
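
The detect-then-license flow described above can be summarized as a routing decision. This sketch is hypothetical and does not reflect HUMAN’s or TollBit’s actual APIs: it assumes that bot detection has already produced an `is_ai_agent` flag, and that a licensed scraper presents a token issued when it purchased access. Human visitors pass through this step, licensed agents are allowed, and every other agent is sent to the paywall instead of receiving the content.

```python
from enum import Enum
from typing import Optional, Set


class Decision(Enum):
    ALLOW = "allow"      # serve the content
    PAYWALL = "paywall"  # withhold the content and point the agent at licensing


def route_request(is_ai_agent: bool, license_token: Optional[str],
                  valid_tokens: Set[str]) -> Decision:
    """Hypothetical routing step run after detection has classified the request."""
    if not is_ai_agent:
        return Decision.ALLOW  # human traffic is unaffected by this step
    if license_token is not None and license_token in valid_tokens:
        return Decision.ALLOW  # the scraper has purchased access
    return Decision.PAYWALL    # unlicensed scraper: no content served


licensed = {"token-123"}
print(route_request(True, None, licensed))  # Decision.PAYWALL
```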

How HUMAN Provides Visibility into Agentic AI

HUMAN Security provides best-in-class bot mitigation capabilities. With our unparalleled visibility into 20 trillion interactions each week, we can detect all forms of automation, including sophisticated bots and bot-controlled browsers—whether or not they are powered by AI. When bots are detected, a “press-and-hold” challenge is served, ensuring that you always keep a “human-in-the-loop.”

Using HUMAN’s platform, customers also have access to best-in-class management of known bots and crawlers. This provides a view and access-management console for all the known bots on your applications, including AI agents and AI scraping agents, empowering our customers to decide how their applications should interact with those bots.

Additionally, the Satori Threat Intelligence team is conducting ongoing research to test the newest agents and monitor them in the wild. This feedback loop fuels HUMAN’s adaptive learning and secondary detection capabilities, ensuring future-proof protections for our customers.

The full impact of agentic AI is yet to be seen. Through cutting-edge threat intelligence, extensive first-party data, and ongoing conversations with leading digital organizations, HUMAN is committed to staying at the forefront of this evolving technology. We are making significant investments in AI-driven research and development, equipping our customers with the tools they need to have full visibility and control of AI agents in 2025 and beyond.